Welp, I zoned out for another year. Luckily I’m pretty sure no one reads this stuff, except maybe CS 186 students who want to investigate the TA who answers debugging questions at 4 AM instead of teaching a discussion section. Which brings us to today’s topic!

Teaching, and other paradoxes

Disclaimer: these aren’t going to be real paradoxes as much as they are catch-22s or surprising results a la Simpson’s paradox, although I did leave a real paradox in my bio on the class website. For background, from spring 2020 to spring 2021 I TA’d for CS 186, Berkeley’s databases course of roughly 600 students per semester. Starting in fall 2020 I was a head TA, which is a fancy way to say I answered more emails and worked extra hours.

An important piece of context is that the course’s assignments are a term-long project implementing various components of a database: query optimizer, indices, concurrency control, etc… Three of my main goals as a TA were:

- Decrease friction on the student’s end. This is a bit of a conflicting goal, since falling into debugging rabbit holes or ending up waist-deep in a flawed design are important things to experience when writing software. But the primary goal of the assignments was to help students grok the algorithms presented in lecture, and getting caught in the weeds isn’t conducive to that goal.

- Help students help themselves. There are a lot of students compared to TAs, and that ratio isn’t getting any better thanks to budget cuts. On top of that, the pandemic made it difficult for students in distant time zones to get help directly. So a nice way to alleviate both issues was to make it easier for students to work out problems on their own.

- Reduce TA workload, again thanks to budget cuts.

While I think I did a pretty good job at hacking away toward these goals, there were a few counterintuitive observations made along the way.

1. Making things clearer leads to more confused students

Bugs and inconsistencies naturally crop up over time in any code, and the programming assignments are no exception. In the past, a large amount of time was spent on developing new assignments instead of fixing problems in the existing ones. Or in the words of Vonnegut, “Everyone wants to build, but no one wants to maintain.” Eventually, we shifted towards improving the existing assignments by:

- Adjusting the given APIs to be less foot-shooty

- Adding tips in the documentation and specification with advice for common errors

- Leaving in more structure in the provided code

- Introducing very, very detailed error output on unit tests

So, after three semesters’ worth of these changes, the amount of confusion surely decreased? Sort of. Overall, projects went a lot smoother – there were significantly fewer questions on Piazza, the Q&A platform for the class. Survey results showed a drop in complaints about workload and median time spent on assignments went down. But the amount of confusion in office hours became worse. Huh, weird. This was almost certainly due to sampling bias. Consider the two types of students who might come to office hours for help on a programming assignment:

- A student who understands the material well, but is confused by poor documentation, confusing APIs, or ambiguous specifications

- A student who is confused about the material, and subsequently extra confused about the assignment.

Type 1 students used to make up the majority of office hour tickets and were the kind many of the improvements were targeted towards. And ultimately, helping those students worked. Even when Type 1 students came in it was usually just a matter of pointing out parts of the documentation or the material to re-review – easy!

Helping Type 2 is a bit more involved since confusion about the assignments would usually be rooted in deeper misunderstandings about the concepts in the course. Rather than pointing towards material to re-review, fixing the problem would require an ad hoc lesson, followed by a code review to fix anything that was written on false assumptions, all before even getting to the original bug! By cutting down on Type 1 students, the observed proportion of Type 2 students increased, creating the illusion of students being more confused.
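To make the arithmetic behind that illusion concrete, here is a minimal sketch. The ticket counts are made up for illustration (they are not real course data), and `type2_share` is a hypothetical helper: the point is only that the Type 2 share of tickets can double even when the number of Type 2 students doesn't change at all.

```python
def type2_share(type1_tickets: int, type2_tickets: int) -> float:
    """Fraction of office hours tickets that come from Type 2 students."""
    return type2_tickets / (type1_tickets + type2_tickets)


# Hypothetical ticket counts, not real course data.
before = type2_share(type1_tickets=70, type2_tickets=30)

# The improvements remove most Type 1 tickets; Type 2 tickets stay the same.
after = type2_share(type1_tickets=20, type2_tickets=30)

print(f"Type 2 share before: {before:.0%}, after: {after:.0%}")
```

With these assumed numbers, the same 30 Type 2 tickets go from 30% of the queue to 60% of it, so a TA sitting in office hours sees "more confusion" even though total confusion went down.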

2. Removing 60% of students would cut wait times by… 0%

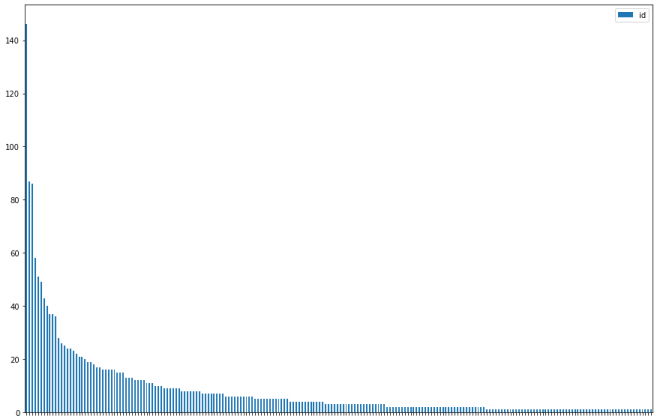

One of the “quality of life” metrics for a course is how long someone has to wait to get help. During the pandemic, it became pretty easy to measure thanks to the introduction of an online ticket queuing system. The online system also made it easier to analyze information about where tickets were coming from:

The above graph shows the number of tickets per student in descending order and follows a classic Zipf distribution. That semester, the top 1% of students accounted for 35% of the tickets while the bottom 80% accounted for about 7.5%, and almost 60% of students never filed a ticket at all. Removing those students from the class entirely would leave the queue, and therefore the wait times, unchanged.
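For anyone wanting to run the same kind of analysis on their own queue, here is a rough sketch. The `tickets` list is a made-up class of 100 students (not our actual data), and `top_share` is a hypothetical helper, so the percentages it produces won't match the ones above.

```python
def top_share(counts, top_fraction):
    """Share of all tickets filed by the top `top_fraction` of students."""
    ordered = sorted(counts, reverse=True)
    k = max(1, round(len(ordered) * top_fraction))
    return sum(ordered[:k]) / sum(ordered)


# Hypothetical tickets-per-student counts for a class of 100 students.
tickets = [40] + [10] * 4 + [3] * 10 + [1] * 25 + [0] * 60

# Fraction of students who never filed a ticket.
never_filed = sum(1 for t in tickets if t == 0) / len(tickets)

# Drop every student who never filed a ticket and recount the queue.
remaining = [t for t in tickets if t > 0]

print(f"Top 1% share of tickets: {top_share(tickets, 0.01):.1%}")
print(f"Students with no tickets: {never_filed:.0%}")
print(f"Tickets before: {sum(tickets)}, after removal: {sum(remaining)}")
```

The last line is the whole point: students who never enter the queue contribute nothing to its length, so removing them changes the class size but not the ticket count.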

3. The duality of man

Many of the changes I introduced as a TA were aimed at making the projects Less Bad™, which oftentimes included changes that closed off certain pitfalls at the expense of freedom in design choice. These weren’t really changes I was happy with – my favorite assignments at Cal were the open-ended ones, and when I took the course I never ran into any severe design blocks. But anonymous feedback made it pretty clear that a non-trivial portion of the class was getting caught in design ruts, which caused many to give up on assignments prematurely or skip them outright. It could be argued that these are acceptable losses, but to me, it was preferable to sacrifice some difficulty for higher completion rates – difficulty is secondary to the main goal of having students apply the material.

While historically feedback on assignments was skewed towards “too difficult! make it easier,” by my third semester of teaching we hit about 90% “too hard!” and 10% “too easy” – it would be tricky to please everyone. A few ways to do it would be:

- Have students work out mandatory design docs before programming, and get a review from TAs. This is a bit foiled though by our already stretched staff allocation.

- Have open-ended extra credit assignments that do allow for more student creativity. This is in the works, probably, since it’s on the list of cans I’ve kicked to future generations of TAs.

Even with those changes though, I imagine we’d still be somewhere where complaints come from both ends of the spectrum. Which is a good spot to be in! A past professor set their lecture pace at the point where complaints about being too fast and too slow hit equilibrium. Not being able to satisfy everyone is hardly a paradox, just a fact of life. The real paradox here is a piece of feedback we received that argued that “the projects handhold too much!” and later concluded with “the projects are too open-ended.” You can never satisfy everyone, and in some cases, you can’t even satisfy one!

4. Good news is no news

This last observation is less of a “paradox” and more of just a quirk with how feedback is viewed, but one that I think is important for other educators who might fall in the same trap. A large part of what I think makes CS 186 a Not Too Bad™ course is that we rely heavily on student feedback. A common theme I noticed when receiving feedback is that we tended to focus on the negatives – our job is to fix things that aren’t working, and positive feedback didn’t provide any hints towards that end.

On the other side of the spectrum, many of the biggest improvements will likely never receive much acknowledgment. Since most improvements are based on feedback after an assignment is submitted, only students in future iterations of the class get to enjoy the fixes, without ever knowing of the original problems. Rarely does anyone ever notice the absence of a bug, and likewise it’s easy to miss the absence of once-common complaints. In other words, when you do things right, people (including yourself) won’t be sure you’ve done anything at all.

Related Reading

- A Berkeley View of Teaching CS at Scale: In case any of the following thoughts crossed your mind while reading this:

  - Undergrad TAs?

  - Head TAs??

  - 600 students???

- Induced demand: I didn’t include this in the list because there isn’t a good way to measure its effect, but I suspect that even if we continued to make the assignments easier, the number of students who need help wouldn’t decrease proportionally. That is to say, students who might have avoided or dropped the class because of limited support availability and long office hours waits would remain enrolled, and so the amount of support needed would stay roughly the same. A class’s reputation for difficulty is a surprisingly big factor in who takes it!

And if you’re in the mood to doom scroll:

- Teaching is a slow process of becoming everything you hate: A very relatable read (although I did genuinely enjoy teaching!)

- My experience as a Unit-18 Berkeley Lecturer