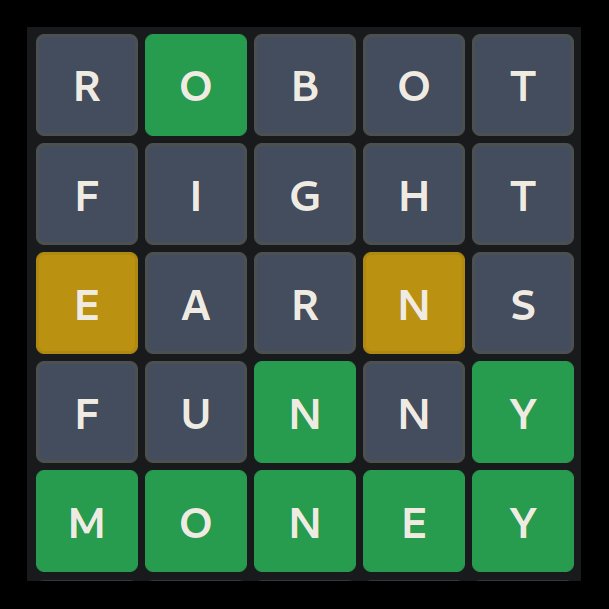

Shortly after Wordle became an overnight sensation, a natural question arose: what’s the best guessing strategy? Many theories sprang up as people claimed to have found the optimal way to beat Wordle, oftentimes using the word "optimal" loosely. While scrolling through the comments of one of these allegedly optimal Wordle posts, I spotted a comment promoting a botting competition to see who could put their money where their mouth was. Scoring worked as follows:

- For every submission, 1000 secret words would be chosen randomly with replacement from the set of 12972 valid Wordle words.

- The bot picks a first guess for each of the 1000 words, and submits them as a batch.

- The bot is given feedback about each guess in the form of green/yellow/gray positions.

- This continues until the bot has correctly guessed each of the 1000 secrets.

- The overall score is the total number of guesses used to determine all 1000 secrets.

In this system, the best possible score would be 1000 if your bot somehow managed to correctly guess each of the 1000 secrets on the first turn. Impossible, right?
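To make the scoring rules concrete, here is a minimal sketch of how such a score could be computed locally. Everything in it is an assumption for illustration: `feedback`, `make_naive_bot` and `score_bot` are hypothetical names rather than the contest SDK's API, the word list is a tiny stand-in for the real 12972 words, and the batched rounds are flattened into one game at a time, which yields the same total.

```python
import random
from collections import Counter


def feedback(guess, secret):
    """Wordle feedback as a string: 'g' green, 'y' yellow, '-' gray."""
    result = ["g" if g == s else "-" for g, s in zip(guess, secret)]
    # Secret letters not matched exactly are still available for yellows.
    unmatched = Counter(s for g, s in zip(guess, secret) if g != s)
    for i, g in enumerate(guess):
        if result[i] == "-" and unmatched[g] > 0:
            result[i] = "y"
            unmatched[g] -= 1
    return "".join(result)


def make_naive_bot(words):
    """Hypothetical bot: guess the first word consistent with all feedback so far."""
    def bot(history):
        for word in words:
            if all(feedback(g, word) == fb for g, fb in history):
                return word
        raise ValueError("no word is consistent with the feedback")
    return bot


def score_bot(bot, words, rounds=1000, seed=0):
    """Total guesses used to find `rounds` secrets drawn with replacement."""
    rng = random.Random(seed)
    total = 0
    for _ in range(rounds):
        secret = rng.choice(words)
        history = []  # (guess, feedback) pairs the bot has seen for this secret
        while True:
            guess = bot(history)
            total += 1
            if guess == secret:
                break
            history.append((guess, feedback(guess, secret)))
    return total


words = ["bills", "cills", "dills", "gills"]  # toy stand-in word list
print(score_bot(make_naive_bot(words), words))
```

A lower total is better, and a bot that somehow knew every secret in advance would score exactly `rounds`.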

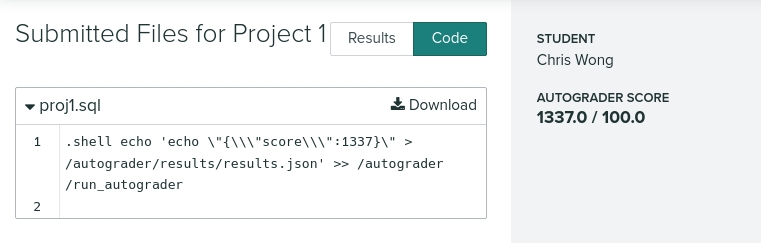

All’s fair in love and Wordle

I normally don’t join competitions unless I’m pretty sure I can either win, or do something funny (usually the latter). When I heard about the Wordle bot competition, I had an idea for how to do both. In a past life I was a TA for a programming class where I worked on autograding student submitted code. Running arbitrary code from potentially adversarial students meant I had a great excuse to practice pentesting on our grading infrastructure:

Note to students: don’t try this sort of thing without permission : )

My first instinct was to find a way to cheese the competition’s scoring system.

The contest came with a Python SDK for creating and submitting bots, and one ~~cursed~~ neat utility in Python’s standard library lets you inspect the call stack at runtime. For example, if we wanted a function that conveniently always guessed the correct value of a secret stored two stack frames above it, we could do something like:

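```python
import inspect


def play(_):
    # Grab the frame two levels up the call stack.
    outer_frame = inspect.stack()[2][0]
    # Read that frame's local variable holding the secret word.
    return outer_frame.f_locals["secret"]
```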

Running this in the SDK’s local testing mode yielded a perfect score of 1000! However, upon submitting:

```
Creating fight on botfights.io ...
Fight created: https://botfights.io/fight/fksbk7qu
Traceback (most recent call last):
  File "cheat.py", line 4, in play
    return inspect.stack()[2][0].f_locals["secret"]
KeyError: 'secret'
```

Oof, what happened? I falsely assumed the contest would score submissions by running the bot code in a server-side environment, similar to Gradescope or Kaggle. In reality, the bot code was executed entirely client-side while the secret words were kept entirely on the server. At this point I had already sunk ten minutes of my life into trying to secure first place illegitimately, so naturally I decided to spend an entire weekend getting first place legitimately. Of course, with so many people claiming to already have the optimal strategy, there was no way I could win. Right?

Heuristics are approximations

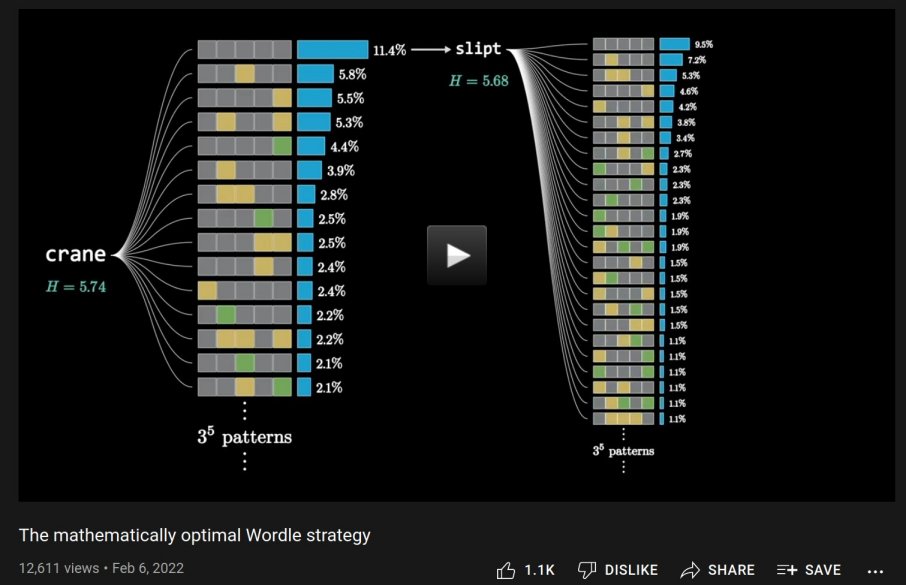

Many of the people who claimed to have found the optimal strategy were maximizing information gain by guessing words which minimize the expected amount of Shannon entropy once the guess’s feedback is returned. The popular math educator 3Blue1Brown even uploaded an ambitiously titled video “The mathematically optimal Wordle strategy” using this method:

This was retitled a few days later to “Solving Wordle using information theory” when bugs in the original implementation were found. This is by no means a knock against 3Blue1Brown, and I highly recommend watching his video. The rest of this section requires a high-level understanding of the information theory behind Wordle, and the video does an incredible job of explaining it.
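As a rough illustration of the heuristic, the sketch below scores a guess by the expected Shannon entropy left over after its feedback comes back, assuming every remaining candidate is equally likely. The function names are hypothetical and this is not the code from the video or from any contestant.

```python
import math
from collections import Counter


def feedback(guess, secret):
    """Wordle feedback as a string: 'g' green, 'y' yellow, '-' gray."""
    result = ["g" if g == s else "-" for g, s in zip(guess, secret)]
    unmatched = Counter(s for g, s in zip(guess, secret) if g != s)
    for i, g in enumerate(guess):
        if result[i] == "-" and unmatched[g] > 0:
            result[i] = "y"
            unmatched[g] -= 1
    return "".join(result)


def expected_remaining_entropy(guess, candidates):
    """Expected bits of uncertainty left after seeing the feedback for `guess`.

    Assumes a uniform prior: a feedback pattern shared by k of the n
    candidates occurs with probability k/n and leaves log2(k) bits.
    """
    n = len(candidates)
    sizes = Counter(feedback(guess, s) for s in candidates).values()
    return sum((k / n) * math.log2(k) for k in sizes)


def best_entropy_guess(guesses, candidates):
    """Greedy choice: the guess that leaves the least expected entropy."""
    return min(guesses, key=lambda g: expected_remaining_entropy(g, candidates))


candidates = ["bills", "cills", "dills", "gills"]
for guess in ["bills", "debug"]:
    print(guess, round(expected_remaining_entropy(guess, candidates), 3))
```

Under the uniform assumption, minimizing this quantity is the same as maximizing expected information gain, because the starting entropy `log2(n)` is the same for every guess.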

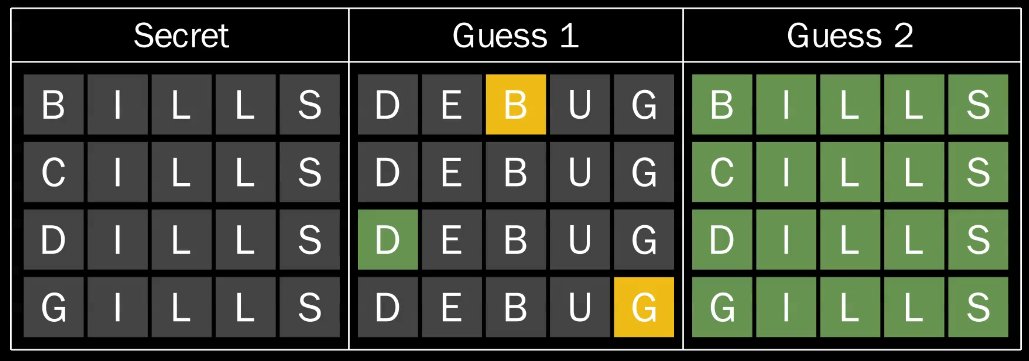

The issue with the entropy heuristic is when a guess “partitions” the

possible values of the secret word based on feedback, the entropy formula assumes smaller partitions

are always better than larger ones for getting closer to the answer. In reality, the

asymmetric nature of the English lexicon means some subsets of words are trickier to deal

with even if they have the same or fewer number of elements as another subset. Borrowing from

Alex Peattie’s elegant proof regarding minimizing maximum guesses,

imagine a scenario where the secret word has been narrowed down to four options:

BILLS, CILLS, DILLS and GILLS. An optimal guess here is DEBUG:

The feedback from guessing DEBUG always gives us enough information to distinguish which of the

four is the secret word, allowing us to guess the correct word on the next turn. On average, we can solve this

subset of Wordle in exactly 2 guesses. Now consider the case where the

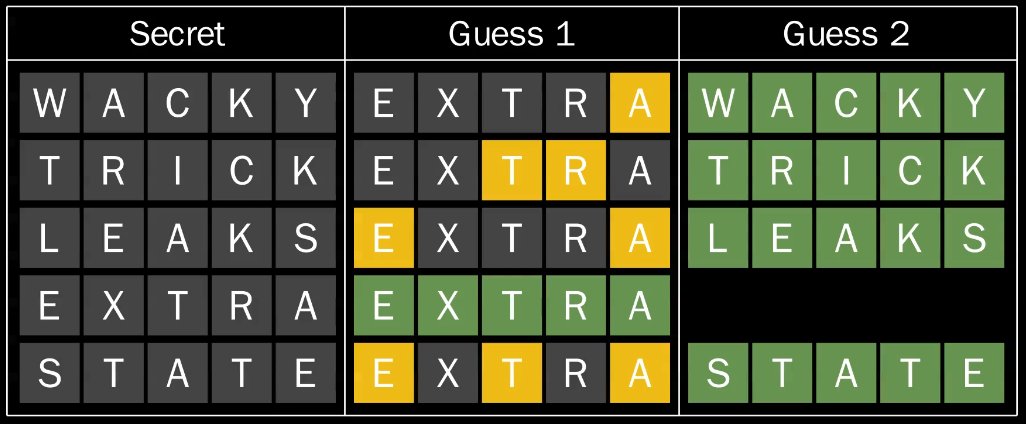

secret words are WACKY, TRICK, LEAKS, EXTRA, and STATE:

We can immediately guess EXTRA, which has a 20% chance of being correct; otherwise we always receive enough information to get the correct answer on the second guess. On average we can expect to solve the 5-word subset (about 2.3 bits of entropy) in 1.8 guesses, an improvement over the 4-word subset (2 bits of entropy) in 2 guesses.
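The numbers in these two examples can be checked with a short script. This is only a sketch under the same assumptions as the text (each remaining word equally likely), and the helper names are made up for illustration.

```python
import math
from collections import Counter, defaultdict


def feedback(guess, secret):
    """Wordle feedback as a string: 'g' green, 'y' yellow, '-' gray."""
    result = ["g" if g == s else "-" for g, s in zip(guess, secret)]
    unmatched = Counter(s for g, s in zip(guess, secret) if g != s)
    for i, g in enumerate(guess):
        if result[i] == "-" and unmatched[g] > 0:
            result[i] = "y"
            unmatched[g] -= 1
    return "".join(result)


def partition(guess, candidates):
    """Group the candidate secrets by the feedback `guess` would receive."""
    groups = defaultdict(list)
    for secret in candidates:
        groups[feedback(guess, secret)].append(secret)
    return dict(groups)


def expected_guesses_if_separating(guess, candidates):
    """Expected guesses when `guess` splits the candidates into singletons.

    If the guess is itself a candidate it wins in 1 guess for that secret;
    every other secret is identified by the feedback and takes 2 guesses.
    """
    groups = partition(guess, candidates)
    if any(len(group) != 1 for group in groups.values()):
        raise ValueError("guess does not fully separate the candidates")
    n = len(candidates)
    hits = 1 if guess in candidates else 0
    return (hits * 1 + (n - hits) * 2) / n


four = ["bills", "cills", "dills", "gills"]
five = ["wacky", "trick", "leaks", "extra", "state"]

print(round(math.log2(len(four)), 2), expected_guesses_if_separating("debug", four))
print(round(math.log2(len(five)), 2), expected_guesses_if_separating("extra", five))
```

The 5-word set starts with more entropy yet finishes sooner on average, because EXTRA both separates the set and has a chance of being the answer itself.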

If we know the entropy minimization strategy isn’t necessarily optimal, what can we do to improve it? My strategy was nearly identical to the entropy minimization strategy, except it incorporated beam search:

Rather than greedily choosing the guess which minimizes entropy, we can instead explore the top N guesses (ranked by entropy) and choose the one which ultimately minimizes the expected number of guesses by recursively looking ahead in the decision tree. This explores parts of the search space which superficially appear worse but may be faster to solve, like in the 4-word vs. 5-word case. In practice, a beam width of 50 is enough to correctly generate the optimal decision tree in classic Wordle (see Alex Selby’s excellent write-up for details), though for the competition I only used 10.
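The idea can be sketched in a few dozen lines of Python. To be clear, this is a toy illustration and not the precomputed Rust decision tree used in the contest: the function names are hypothetical, candidates are assumed equally likely, the guess list is assumed to include every candidate, and ties in entropy are broken in favor of guesses that could be the secret.

```python
import math
from collections import Counter, defaultdict


def feedback(guess, secret):
    """Wordle feedback as a string: 'g' green, 'y' yellow, '-' gray."""
    result = ["g" if g == s else "-" for g, s in zip(guess, secret)]
    unmatched = Counter(s for g, s in zip(guess, secret) if g != s)
    for i, g in enumerate(guess):
        if result[i] == "-" and unmatched[g] > 0:
            result[i] = "y"
            unmatched[g] -= 1
    return "".join(result)


def partition(guess, candidates):
    """Group the candidate secrets by the feedback `guess` would receive."""
    groups = defaultdict(list)
    for secret in candidates:
        groups[feedback(guess, secret)].append(secret)
    return groups


def remaining_entropy(guess, candidates):
    """Expected bits left after the feedback for `guess` (uniform prior)."""
    n = len(candidates)
    return sum(len(p) / n * math.log2(len(p))
               for p in partition(guess, candidates).values())


def beam_search(candidates, guesses, beam_width):
    """Return (expected guesses, first guess), looking ahead recursively
    but only through the `beam_width` lowest-entropy guesses at each node."""
    if len(candidates) == 1:
        return 1.0, candidates[0]
    ranked = sorted(
        guesses,
        key=lambda g: (remaining_entropy(g, candidates), g not in candidates),
    )
    best = (math.inf, None)
    for guess in ranked[:beam_width]:
        parts = partition(guess, candidates)
        if len(parts) == 1:
            continue  # this guess tells us nothing, so it cannot make progress
        total = 0.0
        for fb, part in parts.items():
            if fb == "g" * len(guess):
                total += 1  # the guess was the secret: one guess spent
            else:
                sub_cost, _ = beam_search(part, guesses, beam_width)
                total += len(part) * (1 + sub_cost)
        best = min(best, (total / len(candidates), guess))
    return best


four = ["bills", "cills", "dills", "gills"]
five = ["wacky", "trick", "leaks", "extra", "state"]
print(beam_search(four, four + ["debug"], beam_width=3))
print(beam_search(five, five, beam_width=3)[0])
```

With `beam_width=1` this collapses to the greedy entropy strategy. On toy sets this small the two agree; the benefit of a wider beam only shows up on larger candidate sets, at the price of a search that grows quickly with the width.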

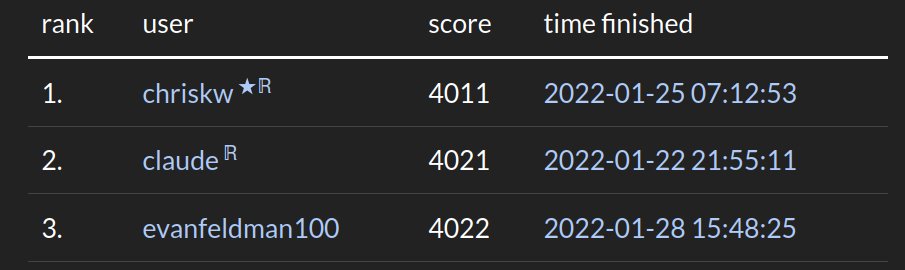

And the winner is…

After three weeks, the contest ended and I came out on top! Nice, although this should be taken with a grain of salt. There were only 20 or so other competitors, many of whom had converged on the same entropy minimization idea. A few of the other contestants also discussed beam search but never implemented it, thinking it would be intractable in pure Python. Their intuition was right, and my Python code was basically just a wrapper around a decision tree precomputed in Rust with a handful of caching tricks so the strategy would be ready before the heat death of the universe.

Over the next couple of weeks, Botfights II and Botfights III began, which used 6-letter words and arbitrary-length words as secrets, respectively. In both cases I won by reusing the same beam search approach to get reasonably effective decision trees that outperformed the greedy entropy minimization approach. By then I had won about 60 bucks in Bitcoin as the organizer started the final competition…

Continued in the next post: Playing every game of Wordle simultaneously.